Installation and Deployment

Installation Guides

For installation instructions, please see the Installation tutorial for your platform.

Architecture

QuantRocket utilizes a Docker-based microservice architecture. Users who are unfamiliar with microservices or new to Docker may find it helpful to read the overview of QuantRocket's architecture.

License key

Activation

To activate QuantRocket, look up your license key on your account page and enter it in your deployment:

$ quantrocket license set 'XXXXXXXXXXXXXXXX'

>>> from quantrocket.license import set_license

>>> set_license("XXXXXXXXXXXXXXXX")

$ curl -X PUT 'http://houston/license-service/license/XXXXXXXXXXXXXXXX'

View your license

You can view the details of the currently installed license:

$ quantrocket license get

licensekey: XXXX....XXXX
software_license:
  account:
    account_limit: XXXXXX USD
  concurrent_install_limit: XX
  license_type: Professional
  user_limit: XX

>>> from quantrocket.license import get_license_profile

>>> get_license_profile()

{'licensekey': 'XXXX....XXXX',
 'software_license': {'license_type': 'Professional',
  'user_limit': XX,
  'concurrent_install_limit': XX,
  'account': {'account_limit': 'XXXXXX USD'}}}

$ curl -X GET 'http://houston/license-service/license'

{"licensekey": "XXXX....XXXX", "software_license": {"license_type": "Professional", "user_limit": XX, "concurrent_install_limit": XX, "account": {"account_limit": "XXXXXX USD"}}}

The license service will re-query your subscriptions and permissions every 10 minutes. If you make a change to your billing plan and want your deployment to see the change immediately, you can force a refresh:

$ quantrocket license get --force-refresh

>>> from quantrocket.license import get_license_profile

>>> get_license_profile(force_refresh=True)

$ curl -X GET 'http://houston/license-service/license?force_refresh=true'

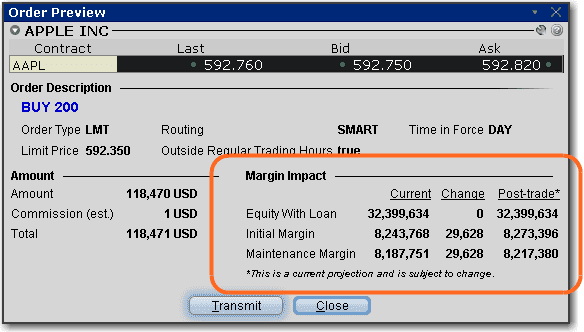

Account limit validation

The account limit displayed in your license profile output applies to live trading using the blotter and to real-time data. The account limit does not apply to historical data collection, research, or backtesting. For advisor accounts, the account size is the sum of all master and sub-accounts.

Paper trading is not subject to the account limit. However, paper trading requires that the live account limit has previously been validated. Thus, before paper trading, you must first connect your live account at least once and let the software validate it.

To validate your account limit if you have only connected your paper account:

- Switch to your live account using the instructions for your broker

- Wait approximately 1 minute. The software queries your account balance every minute whenever your broker is connected.

To verify that account validation has occurred, refresh your license profile. It should now display your account balance and whether the balance is under the account limit:

$ quantrocket license get --force-refresh

licensekey: XXXX....XXXX
software_license:
  account:
    account_balance: 593953.42 USD
    account_balance_details:
    - Account: U12345
      Currency: USD
      NetLiquidation: 593953.42 USD
    account_balance_under_limit: true
    account_limit: XXXXXX USD
  concurrent_install_limit: XX
  license_type: Professional
  user_limit: XX

>>> from quantrocket.license import get_license_profile

>>> get_license_profile(force_refresh=True)

{'licensekey': 'XXXX....XXXX',
 'software_license': {'license_type': 'Professional',
  'user_limit': XX,
  'concurrent_install_limit': XX,
  'account': {'account_limit': 'XXXXXX USD',
   'account_balance': '593953.42 USD',
   'account_balance_under_limit': True,
   'account_balance_details': [{'Account': 'U12345',
     'Currency': 'USD',
     'NetLiquidation': 593953.42}]}}}

$ curl -X GET 'http://houston/license-service/license?force_refresh=true'

{"licensekey": "XXXX....XXXX", "software_license": {"license_type": "Professional", "user_limit": XX, "concurrent_install_limit": XX, "account": {"account_limit": "XXXXXX USD", "account_balance": "593953.42 USD", "account_balance_under_limit": true, "account_balance_details": [{"Account": "U12345", "Currency": "USD", "NetLiquidation": 593953.42}]}}}

If the command output is missing the account_balance and account_balance_under_limit keys, this indicates that the account limit has not yet been validated.

Now you can switch back to your paper account and begin paper trading.
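The validation check described above (looking for the account_balance and account_balance_under_limit keys) can be sketched in Python. This is a minimal illustration rather than part of the QuantRocket API: the helper names is_account_validated and wait_for_validation are hypothetical, as are the default number of attempts and the delay. The fetch_profile argument stands in for a call such as get_license_profile(force_refresh=True).

```python
import time


def is_account_validated(profile):
    """Return True if the license profile contains both account balance keys."""
    account = profile.get("software_license", {}).get("account", {})
    return (
        "account_balance" in account
        and "account_balance_under_limit" in account
    )


def wait_for_validation(fetch_profile, attempts=5, delay=60, sleep=time.sleep):
    """Call fetch_profile() until the account is validated or attempts run out.

    fetch_profile is any zero-argument callable returning a license profile
    dict (hypothetical parameter; pass a wrapper around get_license_profile).
    """
    for attempt in range(attempts):
        if is_account_validated(fetch_profile()):
            return True
        if attempt < attempts - 1:
            sleep(delay)  # the balance is queried about once a minute
    return False
```

With a live deployment, this might be called as wait_for_validation(lambda: get_license_profile(force_refresh=True)).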

User limit vs concurrent install limit

The output of your license profile displays your user limit and your concurrent install limit. User limit indicates the total number of distinct users who are licensed to use the software in any given month. Concurrent install limit indicates the total number of copies of the software that may be installed and running at any given time.

The concurrent install limit is set to (user limit + 1).

Rotate license key

You can rotate your license key at any time from your account page.

Connect from other applications

If you run other applications, you can connect them to your QuantRocket deployment for the purpose of querying data, submitting orders, etc.

Each remote connection to a cloud deployment counts against your plan's concurrent install limit. For example, if you run a single cloud deployment of QuantRocket and connect to it from a single remote application, this is counted as 2 concurrent installs, one for the deployment and one for the remote connection. (Connecting to a local deployment from a separate application running on your local machine does not count against the concurrent install limit.)
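The counting rules above reduce to simple arithmetic, sketched below. The function names are hypothetical and the sketch only restates the rules given in this section: the limit is the user limit plus one, and each deployment and each remote connection to a cloud deployment counts as one install.

```python
def concurrent_install_limit(user_limit):
    """The concurrent install limit is the user limit plus one."""
    return user_limit + 1


def concurrent_installs_used(deployments, remote_cloud_connections):
    """Count installs: one per deployment plus one per remote connection
    to a cloud deployment. Connections to a local deployment from the
    same machine are not counted, so they should not be passed in."""
    return deployments + remote_cloud_connections


# One cloud deployment plus one remote application counts as 2 installs.
used = concurrent_installs_used(deployments=1, remote_cloud_connections=1)
within_limit = used <= concurrent_install_limit(user_limit=1)
```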

To utilize the Python API and/or CLI from outside of QuantRocket, install the client on the application or system you wish to connect from:

$ pip install 'quantrocket-client'

To ensure compatibility, the MAJOR.MINOR version of the client should match the MAJOR.MINOR version of your deployment. For example, if your deployment is version 2.1.x, you can install the latest 2.1.x client:

$ pip install 'quantrocket-client>=2.1,<2.2'

Don't forget to update your client version when you update your deployment version.
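As a small illustration of the MAJOR.MINOR matching rule, the following sketch builds the pip requirement string from a deployment version. The helper name client_requirement is hypothetical and is not part of the QuantRocket client.

```python
def client_requirement(deployment_version):
    """Build a pip requirement pinning the client to the deployment's
    MAJOR.MINOR series, e.g. '2.1.3' -> 'quantrocket-client>=2.1,<2.2'."""
    major, minor = (int(part) for part in deployment_version.split(".")[:2])
    return f"quantrocket-client>={major}.{minor},<{major}.{minor + 1}"


requirement = client_requirement("2.1.3")
# requirement is 'quantrocket-client>=2.1,<2.2'
```

The resulting string can be passed to pip, quoted as in the command above.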

Next, set environment variables to tell the client how to connect to your QuantRocket deployment. For a cloud deployment, this means providing the deployment URL and credentials:

$ export HOUSTON_URL=https://quantrocket.123capital.com

$ export HOUSTON_USERNAME=myusername

$ export HOUSTON_PASSWORD=mypassword

$ [Environment]::SetEnvironmentVariable("HOUSTON_URL", "https://quantrocket.123capital.com", "User")

$ [Environment]::SetEnvironmentVariable("HOUSTON_USERNAME", "myusername", "User")

$ [Environment]::SetEnvironmentVariable("HOUSTON_PASSWORD", "mypassword", "User")

For connecting to a local deployment, only the URL is needed:

$ export HOUSTON_URL=http://localhost:1969

$ [Environment]::SetEnvironmentVariable("HOUSTON_URL", "http://localhost:1969", "User")

Environment variable syntax varies by operating system. To make your environment variables persistent, add them to .bashrc (Linux) or .profile (MacOS) and source the file (for example, source ~/.bashrc). On Windows, restart PowerShell so the new variables take effect.

Finally, test that it worked:

$ quantrocket houston ping

msg: hello from houston

>>> from quantrocket.houston import ping

>>> ping()

{'msg': 'hello from houston'}

$ curl -u myusername:mypassword https://quantrocket.123capital.com/ping

{"msg": "hello from houston"}

To connect from applications running languages other than Python, you can skip the client installation and use the HTTP API directly.
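The shape of a direct HTTP request is the same in any language: the deployment URL, a path such as /ping, and Basic Auth credentials for cloud deployments. The sketch below shows this shape using only the Python standard library, without the QuantRocket client. The function name build_ping_request is hypothetical, and the sketch assumes the HOUSTON_URL, HOUSTON_USERNAME, and HOUSTON_PASSWORD environment variables described above.

```python
import base64
import os
import urllib.request


def build_ping_request(env=None):
    """Build (but do not send) a GET request for the /ping endpoint.

    Credentials are attached as a Basic Auth header only when both
    HOUSTON_USERNAME and HOUSTON_PASSWORD are present, matching the
    local-deployment case where only the URL is needed.
    """
    env = os.environ if env is None else env
    base_url = env["HOUSTON_URL"].rstrip("/")
    request = urllib.request.Request(f"{base_url}/ping", method="GET")
    username = env.get("HOUSTON_USERNAME")
    password = env.get("HOUSTON_PASSWORD")
    if username and password:
        token = base64.b64encode(f"{username}:{password}".encode()).decode()
        request.add_header("Authorization", f"Basic {token}")
    return request
```

Passing the returned request to urllib.request.urlopen would send it to the deployment.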

Broker and Data Connections

This section outlines how to connect to brokers and third-party data providers.

Because QuantRocket runs on your hardware, third-party credentials and API keys that you enter into the software are secure. They are encrypted at rest and never leave your deployment. They are used solely for connecting directly to the third-party API.

Interactive Brokers

Connecting to Interactive Brokers requires an IBKR Pro account. IBKR Lite accounts do not provide API access and will not work with QuantRocket. To switch from IBKR Lite to IBKR Pro, log in to the Client Portal for your Interactive Brokers account.

IBKR Account Structure

Multiple logins and data concurrency

The structure of your Interactive Brokers (IBKR) account has a bearing on the speed with which you can collect real-time and historical data with QuantRocket. In short, the more IB Gateways you run, the more data you can collect. The basics of account structure and data concurrency are outlined below:

- All interaction with the IBKR servers, including real-time and historical data collection, is routed through IB Gateway, IBKR's slimmed-down version of Trader Workstation.

- IBKR imposes rate limits on the amount of historical and real-time data that can be received through IB Gateway.

- Each IB Gateway is tied to a particular set of login credentials. Each login can be running only one active IB Gateway session at any given time.

- However, an account holder can have multiple logins—at least two logins or possibly more, depending on the account structure. Each login can run its own IB Gateway session. In this way, an account holder can potentially run multiple instances of IB Gateway simultaneously.

- QuantRocket is designed to take advantage of multiple IB Gateways. When running multiple gateways, QuantRocket will spread your market data requests among the connected gateways.

- Since each instance of IB Gateway is rate limited separately by IBKR, the combined data throughput from splitting requests among two IB Gateways is twice that of sending all requests to one IB Gateway.

- Each separate login must separately subscribe to the relevant market data in IBKR Client Portal.
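To make the throughput argument concrete, the sketch below spreads a list of requests across the connected gateways in round-robin order. Round-robin is an assumption chosen for illustration; this section does not describe the distribution strategy QuantRocket actually uses, and the function name spread_requests is hypothetical.

```python
from itertools import cycle


def spread_requests(requests, gateways):
    """Assign each request to a gateway in round-robin order (illustrative)."""
    assignments = {gateway: [] for gateway in gateways}
    for request, gateway in zip(requests, cycle(gateways)):
        assignments[gateway].append(request)
    return assignments


assignments = spread_requests(["AAPL", "MSFT", "IBM", "GE"], ["ibg1", "ibg2"])
# Each gateway handles half of the requests, so with separate rate limits
# the combined throughput is roughly double that of a single gateway.
```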

Below are a few common ways to obtain additional logins.

IBKR account structures are complex and vary by subsidiary, local regulations, the person opening the account, etc. The following guidelines are suggestions only and may not be applicable to your situation.

Second user login

Individual account holders can add a second login to their account. This is designed to allow you to use one login for API trading while using the other login to use Trader Workstation for manual trading or account monitoring. However, you can use both logins to collect data with QuantRocket. Note that you can't use the same login to simultaneously run Trader Workstation and collect data with QuantRocket. However, QuantRocket makes it easy to start and stop IB Gateway on a schedule, so the following is an option:

- Login 1 (used for QuantRocket only)

  - IB Gateway always running and available for data collection and placing API orders

- Login 2 (used for QuantRocket and Trader Workstation)

  - Automatically stop IB Gateway daily at 9:30 AM

  - Run Trader Workstation during trading session for manual trading/account monitoring

  - Automatically start IB Gateway daily at 4:00 PM so it can be used for overnight data collection
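The schedule for the second login can be expressed as a simple time-window check, sketched below. The constant and function names are hypothetical, and the times are taken at face value from the list above without any time zone handling, which a real schedule would need.

```python
from datetime import time

GATEWAY_STOP = time(9, 30)   # stop IB Gateway before manual trading begins
GATEWAY_START = time(16, 0)  # restart IB Gateway for overnight data collection


def gateway_should_run(now):
    """Return True if IB Gateway for the shared login should be running
    at the given time of day (a datetime.time)."""
    return now < GATEWAY_STOP or now >= GATEWAY_START
```

Between 9:30 AM and 4:00 PM the function returns False, which is the window in which the login is free for Trader Workstation.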

Advisor/Friends and Family accounts

An advisor account or the similarly structured Friends and Family account offers the possibility to obtain additional logins. Even an individual trader can open a Friends and Family account, in which they serve as their own advisor. The account setup is as follows:

- Master/advisor account: no trading occurs in this account. The account is funded only with enough money to cover market data costs. This yields 1 IB Gateway login.

- Master/advisor second user login: like an individual account, the master account can create a second login, subscribe to market data with this login, and use it for data collection.

- Client account: this is the main trading account where the trading funds are deposited. This account receives its own login (for 3 total). By default this account does not have trading permissions, but you can enable client trading permissions via the master account, then subscribe to market data in the client account and begin using the client login to run another instance of IB Gateway. (Note that it's not possible to add a second login for a client account.)

If you have other accounts such as retirement accounts, you can add them as additional client accounts and obtain additional logins.

Paper trading accounts

Each IBKR account holder can enable a paper trading account for simulated trading. You can share market data with your paper account and use the paper account login with QuantRocket to collect data, as well as to paper trade your strategies. You don't need to switch to using your live account until you're ready for live trading (although it's also fine to use your live account login from the start).

Note that, due to restrictions on market data sharing, it's not possible to run IB Gateway using the live account login and corresponding paper account login at the same time. If you try, one of the sessions will disconnect the other session.

IBKR market data permissions

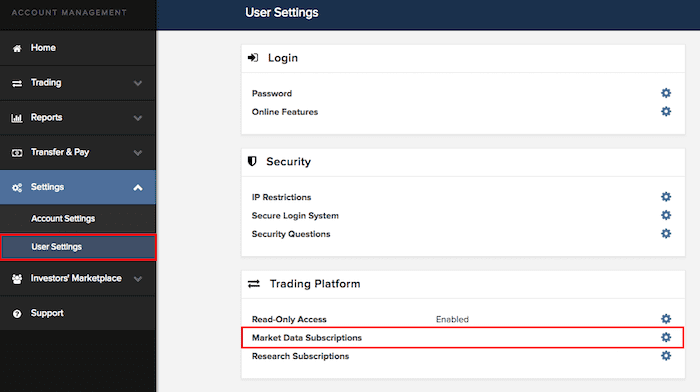

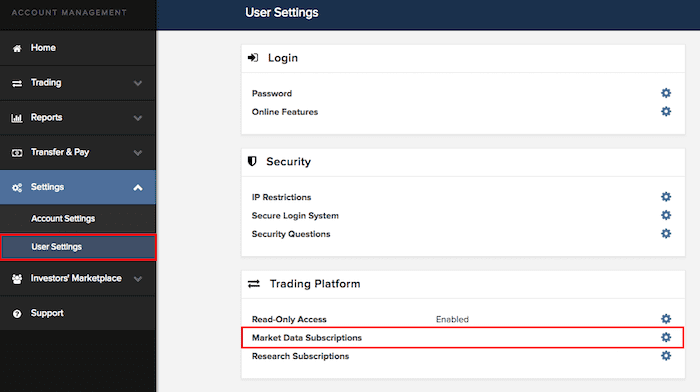

To collect IBKR data using QuantRocket, you must subscribe to the relevant market data in your IBKR account. In IBKR Client Portal, click on Settings > User Settings > Market Data Subscriptions:

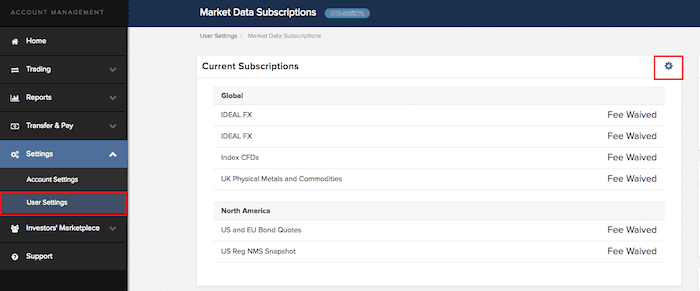

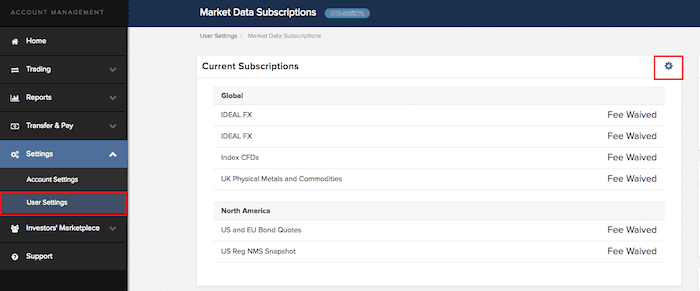

Click the edit icon then select and confirm the relevant subscriptions:

Market data for paper accounts

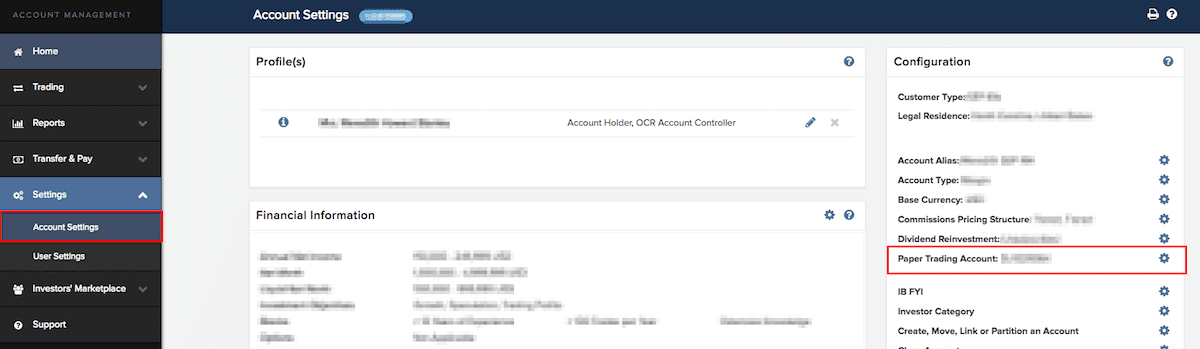

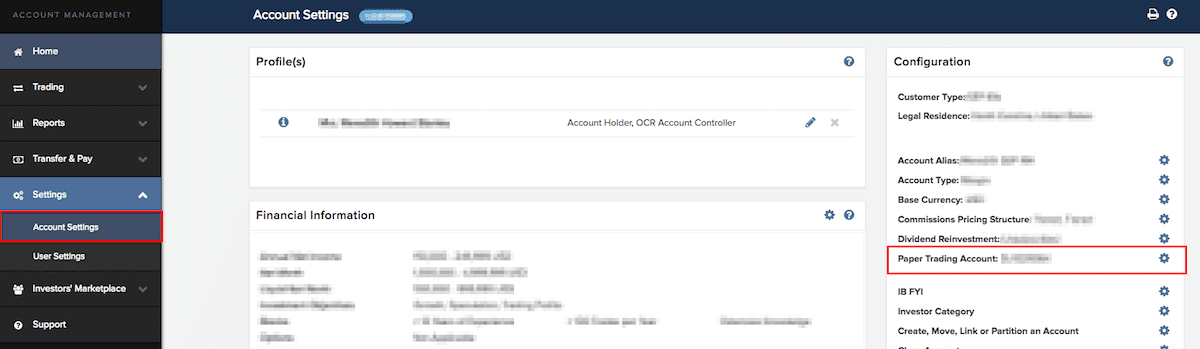

IBKR paper accounts do not directly subscribe to market data. Rather, to access market data using your IBKR paper account, subscribe to the data in your live account and share it with your paper account. Log in to IBKR Client Portal with your live account login and go to Settings > Account Settings > Paper Trading Account:

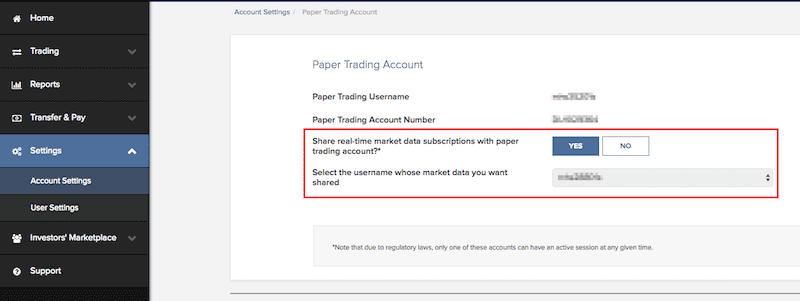

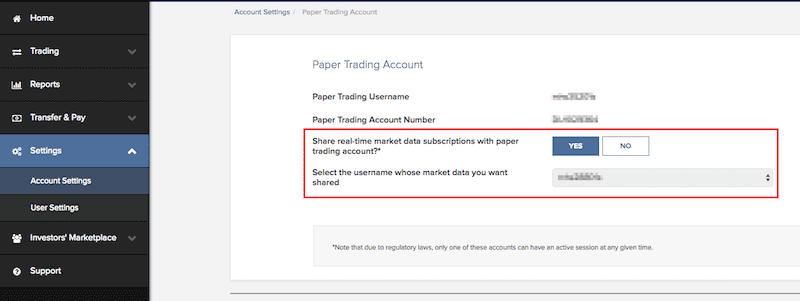

Then select the option to share your live account's market data with your paper account:

IB Gateway

QuantRocket uses the IBKR API to collect market data from IBKR, submit orders, and track positions and account balances. All communication with IBKR is routed through IB Gateway, a Java application which is a slimmed-down version of Trader Workstation (TWS) intended for API use. You can run one or more IB Gateway services through QuantRocket, where each gateway instance is associated with a different IBKR username and password.

Connect to IBKR

Your credentials are encrypted at rest and never leave your deployment.

IB Gateway runs inside the ibg1 container and connects to IBKR using your IBKR username and password. (If you have multiple IBKR usernames, you can run multiple IB Gateways.) The ibgrouter container provides an API that allows you to start and stop IB Gateway inside the ibg container(s).

The steps for connecting to your IBKR account and starting IB Gateway differ depending on whether your IBKR account requires the use of a security card at login.

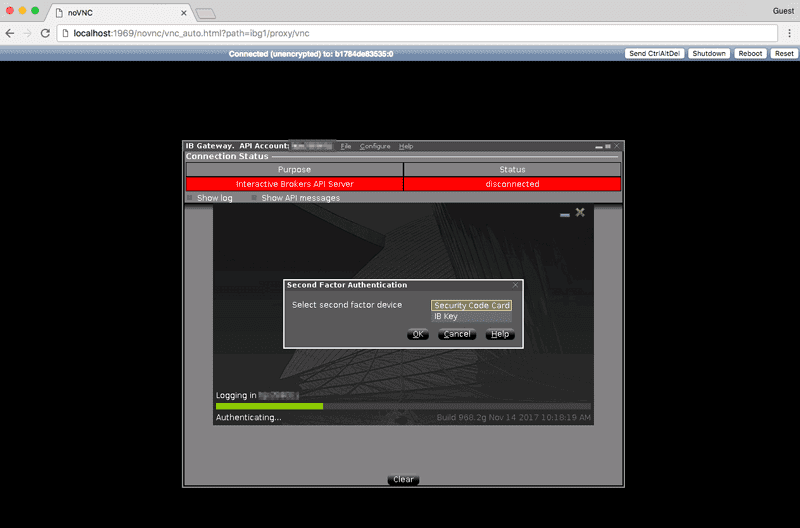

Secure Login System (Two-Factor Authentication)

For fully automated configuration and running of IB Gateway, you must partially opt out of the Secure Login System (SLS), IBKR's two-factor authentication. With a partial opt-out, your username and password (but not your security device) are required for logging into IB Gateway and other IBKR trading platforms. Your security device is still required for logging in to Client Portal. A partial opt-out can be performed in Client Portal by going to Settings > User Settings > Secure Login System > Secure Login Settings.

If you prefer not to perform a partial opt-out of IBKR's Secure Login System (SLS) or can't for regulatory reasons, you can still use QuantRocket but will need to perform two-factor authentication on your mobile device (or manually enter your security code) each time you start IB Gateway using your live login. Also, be sure to read the section about IB Gateway auto-restarts which outlines the need to perform two-factor authentication weekly on Sundays.

A security card is not required for paper accounts, so you can enjoy full automation by using your paper account, even if your live account requires a security card for login.

Enter IBKR login

To connect to your IBKR account, enter your IBKR login into your deployment, as well as the desired trading mode (live or paper). You'll be prompted for your password:

$ quantrocket ibg credentials 'ibg1' --username 'myuser' --paper

Enter IBKR Password:

status: successfully set ibg1 credentials

>>> from quantrocket.ibg import set_credentials

>>> set_credentials("ibg1", username="myuser", trading_mode="paper")

Enter IBKR Password:

{'status': 'successfully set ibg1 credentials'}

$ curl -X PUT 'http://houston/ibg1/credentials' -d 'username=myuser' -d 'password=mypassword' -d 'trading_mode=paper'

{"status": "successfully set ibg1 credentials"}

When you set your credentials, QuantRocket securely stores them inside your deployment so you don't need to enter them again, then starts IB Gateway to verify that they work. Starting IB Gateway takes approximately 30 seconds.

If you are connecting to a live IBKR account that requires second factor authentication, you will see an error message:

$ quantrocket ibg credentials 'ibg1' --username 'myuser' --live

Enter IBKR Password:

msg: Cannot start gateway because second factor authentication is required. Please

complete authentication on your mobile device or open the IB Gateway GUI to authenticate

manually. See http://qrok.it/h/ib2fa for help.

status: error

>>> from quantrocket.ibg import set_credentials

>>> set_credentials("ibg1", username="myuser", trading_mode="live")

Enter IBKR Password:

HTTPError: ('401 Client Error: UNAUTHORIZED for url: http://houston/ibg1/credentials', {'status': 'error', 'msg': 'Cannot start gateway because second factor authentication is required. Please complete authentication on your mobile device or open the IB Gateway GUI to authenticate manually. See http://qrok.it/h/ib2fa for help.'})

$ curl -X PUT 'http://houston/ibg1/credentials' -d 'username=myuser' -d 'password=mypassword' -d 'trading_mode=live'

{"status": "error", "msg": "Cannot start gateway because second factor authentication is required. Please complete authentication on your mobile device or open the IB Gateway GUI to authenticate manually. See http://qrok.it/h/ib2fa for help."}

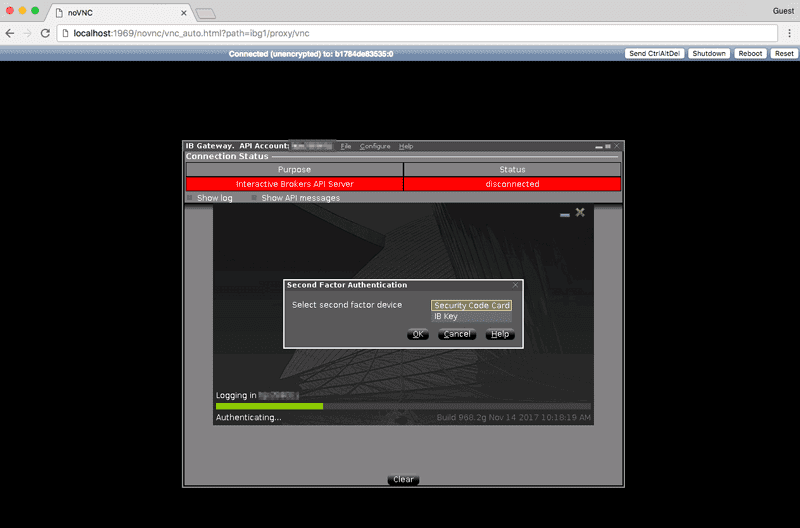

Complete the authentication using your mobile device, or open the IB Gateway GUI to enter your security code or complete the login manually.

To quit the GUI session but leave IB Gateway running, simply close your browser tab.

If you encounter errors trying to start IB Gateway, refer to a later section to learn how to access the IB Gateway GUI for troubleshooting.

Querying your IBKR account balance is a good way to verify your IBKR connection:

$ quantrocket account balance --latest --fields 'NetLiquidation' | csvlook

| Account | Currency | NetLiquidation | LastUpdated |

| --------- | -------- | -------------- | ------------------- |

| DU12345 | USD | 500,000.00 | 2020-02-02 22:57:13 |

>>> from quantrocket.account import download_account_balances

>>> import io

>>> import pandas as pd

>>> f = io.StringIO()

>>> download_account_balances(f, latest=True, fields=["NetLiquidation"])

>>> balances = pd.read_csv(f, parse_dates=["LastUpdated"])

>>> balances.head()

Account Currency NetLiquidation LastUpdated

0 DU12345 USD 500000.0 2020-02-02 22:57:13

$ curl 'http://houston/account/balances.csv?latest=true&fields=NetLiquidation'

Account,Currency,NetLiquidation,LastUpdated

DU12345,USD,500000.0,2020-02-02 22:57:13

Switch between live and paper account

When you sign up for an IBKR paper account, IBKR provides login credentials for the paper account. However, it is also possible to log in to the paper account by using your live account credentials and specifying the trading mode as "paper". Thus, technically the paper login credentials are unnecessary.

Using your live login credentials for both live and paper trading allows you to easily switch back and forth. Supposing you originally select the paper trading mode:

$ quantrocket ibg credentials 'ibg1' --username 'myliveuser' --paper

Enter IBKR Password:

status: successfully set ibg1 credentials

>>> from quantrocket.ibg import set_credentials

>>> set_credentials("ibg1", username="myliveuser", trading_mode="paper")

Enter IBKR Password:

{'status': 'successfully set ibg1 credentials'}

$ curl -X PUT 'http://houston/ibg1/credentials' -d 'username=myliveuser' -d 'password=mypassword' -d 'trading_mode=paper'

{"status": "successfully set ibg1 credentials"}

You can later switch to live trading mode without re-entering your credentials:

$ quantrocket ibg credentials 'ibg1' --live

status: successfully set ibg1 credentials

>>> set_credentials("ibg1", trading_mode="live")

{'status': 'successfully set ibg1 credentials'}

$ curl -X PUT 'http://houston/ibg1/credentials' -d 'trading_mode=live'

{"status": "successfully set ibg1 credentials"}

If you forget which mode you're in (or which login you used), you can check:

$ quantrocket ibg credentials 'ibg1'

TRADING_MODE: live

TWSUSERID: myliveuser

>>> from quantrocket.ibg import get_credentials

>>> get_credentials("ibg1")

{'TWSUSERID': 'myliveuser', 'TRADING_MODE': 'live'}

$ curl -X GET 'http://houston/ibg1/credentials'

{"TWSUSERID": "myliveuser", "TRADING_MODE": "live"}

Start/stop IB Gateway

IB Gateway must be running whenever you want to collect market data or place or monitor orders. You can optionally stop IB Gateway when you're not using it. Interactive Brokers limits each unique IBKR login to one IB Gateway or Trader Workstation session at a time. Therefore, if you need to log in to Trader Workstation using the same login credentials you are using with QuantRocket, you must first stop IB Gateway.

To check the current status of your IB Gateway(s):

$ quantrocket ibg status

ibg1: stopped

>>> from quantrocket.ibg import list_gateway_statuses

>>> list_gateway_statuses()

{'ibg1': 'stopped'}

$ curl -X GET 'http://houston/ibgrouter/gateways'

{"ibg1": "stopped"}

You can start IB Gateway, optionally waiting for the startup process to complete:

$ quantrocket ibg start --wait

ibg1:
  status: running

>>> from quantrocket.ibg import start_gateways

>>> start_gateways(wait=True)

{'ibg1': {'status': 'running'}}

$ curl -X POST 'http://houston/ibgrouter/gateways?wait=True'

{"ibg1": {"status": "running"}}

And later stop it:

$ quantrocket ibg stop --wait

ibg1:
  status: stopped

>>> from quantrocket.ibg import stop_gateways

>>> stop_gateways(wait=True)

{'ibg1': {'status': 'stopped'}}

$ curl -X DELETE 'http://houston/ibgrouter/gateways?wait=True'

{"ibg1": {"status": "stopped"}}

IB Gateway Auto-Restart

IB Gateway automatically restarts itself once a day. This behavior is enforced by IB Gateway itself, not QuantRocket, and is designed to keep IB Gateway running smoothly.

The daily restart happens at 11:45 PM New York time. The restart takes about 30 seconds. If historical or fundamental data collection is in progress, the data collection services will detect the interrupted connection and automatically resume when the connection is restored. (If IB Gateway is not running at the time of the restart, no restart is required or occurs.)

If you need the restart to occur at a different time (for example because your strategy may be placing trades at 11:45 PM New York time), you can modify the restart time by opening the IB Gateway GUI and navigating to Configure > Settings > Lock and Exit. Each time you re-deploy QuantRocket or run a software update which creates or re-creates the ibg container, you will need to edit the setting again.
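To see when the default 11:45 PM New York restart falls in another time zone, a conversion along the following lines can help. This is a minimal sketch using the standard library's zoneinfo module (Python 3.9+); the function name restart_time_in is hypothetical.

```python
from datetime import date, datetime, time
from zoneinfo import ZoneInfo

NEW_YORK = ZoneInfo("America/New_York")


def restart_time_in(day, zone_name):
    """Return the default daily restart (11:45 PM New York time on the
    given date) expressed in another IANA time zone."""
    restart = datetime.combine(day, time(23, 45), tzinfo=NEW_YORK)
    return restart.astimezone(ZoneInfo(zone_name))


utc_restart = restart_time_in(date(2024, 1, 15), "UTC")
# In January, New York is 5 hours behind UTC, so the restart falls at
# 04:45 UTC on the following day.
```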

Auto-restart with two-factor authentication

For accounts with two-factor authentication enabled, you must manually stop and start IB Gateway each Sunday and perform two-factor authentication. On other days, the auto-restart will automatically log you back in without the need to perform two-factor authentication. Thus, accounts with two-factor authentication can remain logged in all week, with manual login on Sundays.

To avoid confusion, note that if IB Gateway is running on Saturday, the Saturday night auto-restart will successfully log you back in, and IB Gateway will be running on Sunday morning. However, you must still manually stop and start IB Gateway and re-authenticate at some point on Sunday; otherwise the next auto-restart (on Sunday night) will fail. This is because IB Gateway internally uses a re-authentication token to allow the application to auto-restart throughout the week without manual re-authentication. The token expires Saturday night, so the Sunday night auto-restart will fail unless you manually re-authenticate on Sunday, thereby obtaining a new re-authentication token that is good for another week.
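As a reminder aid for the weekly manual login, the sketch below computes the next Sunday on or after a given date. The function name next_manual_reauth is hypothetical, and the sketch ignores time zones, so the date should be interpreted in whatever zone you use to schedule the Sunday re-authentication.

```python
from datetime import date, timedelta


def next_manual_reauth(day):
    """Return the next Sunday on or after the given date."""
    days_until_sunday = (6 - day.weekday()) % 7  # Monday=0 ... Sunday=6
    return day + timedelta(days=days_until_sunday)


reauth_day = next_manual_reauth(date(2024, 1, 15))  # a Monday
# reauth_day is Sunday, 2024-01-21
```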

IB Gateway GUI

Normally you won't need to access the IB Gateway GUI. However, you might need access to troubleshoot a login issue, or if you've enabled two-factor authentication for IB Gateway.

To allow access to the IB Gateway GUI, QuantRocket uses NoVNC, which uses the WebSockets protocol to support VNC connections in the browser. To open an IB Gateway GUI connection in your browser, click the "IB Gateway GUI" button on the JupyterLab Launcher, or open it from the File menu. The IB Gateway GUI will open in a new window (make sure your browser doesn't block the pop-up).

If IB Gateway isn't currently running, the screen will be black.

To quit the VNC session but leave IB Gateway running, simply close your browser tab.

For improved security for cloud deployments, QuantRocket doesn't directly expose any VNC ports to the outside. By proxying VNC connections through houston using NoVNC, such connections are protected by Basic Auth and SSL, just like every other request sent through houston.

Multiple IB Gateways

QuantRocket supports running multiple IB Gateways, each associated with a particular IBKR login. Two of the main reasons for running multiple IB Gateways are:

- To trade multiple accounts

- To increase market data concurrency

The default IB Gateway service is called ibg1. To run multiple IB Gateways, create a file called docker-compose.override.yml in the same directory as your docker-compose.yml and add the desired number of additional services as shown below. In this example we are adding two additional IB Gateway services, ibg2 and ibg3, which inherit from the definition of ibg1:

version: '2.4'
services:
  ibg2:
    extends:
      file: docker-compose.yml
      service: ibg1
  ibg3:
    extends:
      file: docker-compose.yml
      service: ibg1

You can learn more about docker-compose.override.yml in another section.
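If you add gateways often, the override file can be generated rather than written by hand. The sketch below produces the same YAML structure as the example above for any list of additional service names. The function name build_override and its parameters are hypothetical; writing the result to docker-compose.override.yml is left to the caller.

```python
def build_override(extra_gateways, base_service="ibg1",
                   compose_file="docker-compose.yml"):
    """Return docker-compose.override.yml content that defines each extra
    gateway as an extension of the base IB Gateway service."""
    lines = ["version: '2.4'", "services:"]
    for name in extra_gateways:
        lines += [
            f"  {name}:",
            "    extends:",
            f"      file: {compose_file}",
            f"      service: {base_service}",
        ]
    return "\n".join(lines) + "\n"


override_yaml = build_override(["ibg2", "ibg3"])
```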

Then, deploy the new service(s):

$ cd /path/to/docker-compose.yml

$ docker compose -p quantrocket up -d

You can then enter your login for each of the new IB Gateways:

$ quantrocket ibg credentials 'ibg2' --username 'myuser' --paper

Enter IBKR Password:

status: successfully set ibg2 credentials

>>> from quantrocket.ibg import set_credentials

>>> set_credentials("ibg2", username="myuser", trading_mode="paper")

Enter IBKR Password:

{'status': 'successfully set ibg2 credentials'}

$ curl -X PUT 'http://houston/ibg2/credentials' -d 'username=myuser' -d 'password=mypassword' -d 'trading_mode=paper'

{"status": "successfully set ibg2 credentials"}

When starting and stopping gateways, the default behavior is to start or stop all gateways. To target specific gateways, use the gateways parameter:

$ quantrocket ibg start --gateways 'ibg2'

status: the gateways will be started asynchronously

>>> from quantrocket.ibg import start_gateways

>>> start_gateways(gateways=["ibg2"])

{'status': 'the gateways will be started asynchronously'}

$ curl -X POST 'http://houston/ibgrouter/gateways?gateways=ibg2'

{"status": "the gateways will be started asynchronously"}

Market data permission file

Generally, loading your market data permissions into QuantRocket is only necessary when you are running multiple IB Gateway services with different market data permissions for each.

To retrieve market data from IBKR, you must subscribe to the appropriate market data subscriptions in IBKR Client Portal. QuantRocket can't identify your subscriptions via API, so you must tell QuantRocket about your subscriptions by loading a YAML configuration file. If you don't load a configuration file, QuantRocket will assume you have market data permissions for any data you request through QuantRocket. If you only run one IB Gateway service, this is probably sufficient and you can skip the configuration file. However, if you run multiple IB Gateway services with separate market data permissions for each, you will probably want to load a configuration file so QuantRocket can route your requests to the appropriate IB Gateway service. You should also update your configuration file whenever you modify your market data permissions in IBKR Client Portal.

An example IB Gateway permissions template is available from the JupyterLab launcher.

QuantRocket looks for a market data permission file called quantrocket.ibg.permissions.yml in the top-level of the Jupyter file browser (that is, /codeload/quantrocket.ibg.permissions.yml). The format of the YAML file is shown below:

ibg1:
  marketdata:
    STK:
      - NYSE
      - ISLAND
      - TSEJ
    FUT:
      - GLOBEX
      - OSE
    CASH:
      - IDEALPRO
  research:
    - wsh
ibg2:
  marketdata:
    STK:
      - NYSE

When you create or edit this file, QuantRocket will detect the change and load the configuration. It's a good idea to have flightlog open when you do this. If the configuration file is valid, you'll see a success message:

quantrocket.ibgrouter: INFO Successfully loaded /codeload/quantrocket.ibg.permissions.yml

If the configuration file is invalid, you'll see an error message:

quantrocket.ibgrouter: ERROR Could not load /codeload/quantrocket.ibg.permissions.yml:

quantrocket.ibgrouter: ERROR unknown key(s) for service ibg1: marketdata-typo
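A pre-check along the following lines can catch this kind of typo before you save the file. It is only a sketch: the set of allowed keys (marketdata and research) is inferred from the example file above and may not be exhaustive, and find_unknown_keys is a hypothetical helper, not QuantRocket's own validation. The config argument is the parsed YAML as a dictionary.

```python
# Keys seen in the example permissions file; an assumption, not a full spec.
ALLOWED_KEYS = {"marketdata", "research"}


def find_unknown_keys(config):
    """Return {service: [unknown keys]} for services with unexpected keys."""
    problems = {}
    for service, permissions in config.items():
        unknown = sorted(set(permissions) - ALLOWED_KEYS)
        if unknown:
            problems[service] = unknown
    return problems


problems = find_unknown_keys({
    "ibg1": {"marketdata-typo": {"STK": ["NYSE"]}},
    "ibg2": {"marketdata": {"STK": ["NYSE"]}},
})
# problems is {'ibg1': ['marketdata-typo']}
```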

You can also dump out the currently loaded config to confirm it is as you expect:

$ quantrocket ibg config

ibg1:
  marketdata:
    CASH:
    - IDEALPRO
    FUT:
    - GLOBEX
    - OSE
    STK:
    - NYSE
    - ISLAND
    - TSEJ
  research:
  - reuters
  - wsh
ibg2:
  marketdata:
    STK:
    - NYSE

>>> from quantrocket.ibg import get_ibg_config

>>> get_ibg_config()

{
    'ibg1': {
        'marketdata': {
            'CASH': [
                'IDEALPRO'
            ],
            'FUT': [
                'GLOBEX',
                'OSE'
            ],
            'STK': [
                'NYSE',
                'ISLAND',
                'TSEJ'
            ]
        },
        'research': [
            'reuters',
            'wsh'
        ]
    },
    'ibg2': {
        'marketdata': {
            'STK': [
                'NYSE'
            ]
        }
    }
}

$ curl -X GET 'http://houston/ibgrouter/config'

{
  "ibg1": {
    "marketdata": {
      "CASH": [
        "IDEALPRO"
      ],
      "FUT": [
        "GLOBEX",
        "OSE"
      ],
      "STK": [
        "NYSE",
        "ISLAND",
        "TSEJ"
      ]
    },
    "research": [
      "reuters",
      "wsh"
    ]
  },
  "ibg2": {
    "marketdata": {
      "STK": [
        "NYSE"
      ]
    }
  }
}
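To illustrate how a permissions config can drive request routing, the sketch below lists the gateways that have permission for a given security type and exchange. The selection logic is an assumption made for illustration and is not QuantRocket's actual routing code; gateways_with_permission is a hypothetical helper that operates on a dictionary shaped like the config output above.

```python
def gateways_with_permission(config, sec_type, exchange):
    """Return the gateway services whose marketdata permissions include
    the given exchange for the given security type."""
    return [
        service
        for service, permissions in config.items()
        if exchange in permissions.get("marketdata", {}).get(sec_type, [])
    ]


config = {
    "ibg1": {
        "marketdata": {
            "CASH": ["IDEALPRO"],
            "FUT": ["GLOBEX", "OSE"],
            "STK": ["NYSE", "ISLAND", "TSEJ"],
        },
        "research": ["reuters", "wsh"],
    },
    "ibg2": {"marketdata": {"STK": ["NYSE"]}},
}

nyse_gateways = gateways_with_permission(config, "STK", "NYSE")
globex_gateways = gateways_with_permission(config, "FUT", "GLOBEX")
# NYSE stock requests could go to either gateway; GLOBEX futures only to ibg1.
```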

IB Gateway log files

There are two types of logs produced by IB Gateway: API logs and Gateway logs. The API logs show the API messages being sent back and forth between QuantRocket and IB Gateway. The Gateway logs show detailed debugging logs for the IB Gateway application.

The API logs are occasionally useful for troubleshooting QuantRocket and might be requested by QuantRocket support. The Gateway logs might occasionally be requested by Interactive Brokers support. If you need to send these files to QuantRocket or Interactive Brokers support for troubleshooting, you can generate and export the files as described below.

API logs

You can use the IB Gateway GUI to generate API logs, then export the logs to the Docker filesystem, then copy them to your local filesystem.

- With IB Gateway running, open the GUI.

- In the IB Gateway GUI, click Configure > Settings, navigate to API > Settings and check the box for "Create API message log file."

- IB Gateway will begin to generate API logs. Continue using the application until the messages you are interested in have been generated.

- Next, in the IB Gateway GUI, click File > API Logs, and select the day you're interested in.

- Click Export Logs or Export Today Logs. A file browser will open, showing the filesystem inside the Docker container.

- Export the log file to an easy-to-find location such as /tmp/api-exported-logs.txt.

- From the host machine, copy the exported logs from the Docker container to your local filesystem. For ibg1 logs saved to the above location, the command would be:

$ docker cp quantrocket_ibg1_1:/tmp/api-exported-logs.txt api-exported-logs.txt

After exporting the API logs, open IB Gateway GUI again and uncheck the box for "Create API message log file."

Gateway logs

You can use the IB Gateway GUI to export the Gateway logs to the Docker filesystem, then copy them to your local filesystem.

- With IB Gateway running, open the GUI.

- In the IB Gateway GUI, click File > Gateway Logs, and select the day you're interested in.

- Click Export Logs or Export Today Logs. A file browser will open, showing the filesystem inside the Docker container.

- Export the log file to an easy-to-find location such as /tmp/ibgateway-exported-logs.txt.

- From the host machine, copy the exported logs from the Docker container to your local filesystem. For ibg1 logs saved to the above location, the command would be:

$ docker cp quantrocket_ibg1_1:/tmp/ibgateway-exported-logs.txt ibgateway-exported-logs.txt
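If you export logs regularly, the copy step can be scripted. The sketch below only builds the docker cp command shown above; the helper name build_docker_cp_command is hypothetical, and the assumption that every gateway's container is named quantrocket_<gateway>_1 is extrapolated from the ibg1 example, so check your actual container names with docker ps before relying on it.

```python
import shlex
from typing import List


def build_docker_cp_command(gateway: str, container_path: str, local_path: str) -> List[str]:
    """Build the `docker cp` command for copying an exported log file.

    Assumption: the container is named quantrocket_<gateway>_1, as in the
    ibg1 example above.
    """
    container = f"quantrocket_{gateway}_1"
    return ["docker", "cp", f"{container}:{container_path}", local_path]


command = build_docker_cp_command(
    "ibg1",
    "/tmp/ibgateway-exported-logs.txt",
    "ibgateway-exported-logs.txt",
)
print(shlex.join(command))

# To actually run the copy from the host machine:
# import subprocess
# subprocess.run(command, check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems if a path contains spaces.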

Alpaca

Your credentials are encrypted at rest and never leave your deployment.

You can connect to one or more paper Alpaca accounts and one or more live Alpaca accounts. Enter your API key and trading mode for each account you want to connect (you will be prompted for your secret key):

$ quantrocket license alpaca-key --api-key 'PXXXXXXXXXXXXXXXXXX' --paper

Enter Alpaca secret key:

status: successfully set Alpaca paper API key

>>> from quantrocket.license import set_alpaca_key

>>> set_alpaca_key(api_key="PXXXXXXXXXXXXXXXXXX", trading_mode="paper")

Enter Alpaca secret key:

{'status': 'successfully set Alpaca paper API key'}

$ curl -X PUT 'http://houston/license-service/credentials/alpaca' -d 'api_key=PXXXXXXXXXXXXXXXXXX&secret_key=XXXXXXXXXXXXXXXXXX&trading_mode=paper'

{"status": "successfully set Alpaca paper API key"}

If you plan to use Alpaca for real-time data and subscribe to Alpaca's unlimited data package, which provides access to the full SIP data feed, indicate this by including the --realtime-data/realtime_data parameter and specifying 'sip'. If the parameter is omitted, only Alpaca's free IEX data permission is assumed:

$ quantrocket license alpaca-key --api-key 'XXXXXXXXXXXXXXXXXX' --live --realtime-data 'sip'

Enter Alpaca secret key:

status: successfully set Alpaca live API key

>>> set_alpaca_key(api_key="XXXXXXXXXXXXXXXXXX", trading_mode="live", realtime_data="sip")

Enter Alpaca secret key:

{'status': 'successfully set Alpaca live API key'}

$ curl -X PUT 'http://houston/license-service/credentials/alpaca' -d 'api_key=XXXXXXXXXXXXXXXXXX&secret_key=XXXXXXXXXXXXXXXXXX&trading_mode=live&realtime_data=sip'

{"status": "successfully set Alpaca live API key"}

You can view the currently configured API keys, which are organized by account number:

$ quantrocket license alpaca-key

12345678:
  api_key: XXXXXXXXXXXXXXXXXX
  realtime_data: sip
  trading_mode: live
P1234567:
  api_key: PXXXXXXXXXXXXXXXXXX
  realtime_data: iex
  trading_mode: paper

>>> from quantrocket.license import get_alpaca_key

>>> get_alpaca_key()

{'12345678': {'api_key': 'XXXXXXXXXXXXXXXXXX', 'realtime_data': 'sip', 'trading_mode': 'live'},

'P1234567': {'api_key': 'PXXXXXXXXXXXXXXXXXX', 'realtime_data': 'iex', 'trading_mode': 'paper'}}

$ curl -X GET 'http://houston/license-service/credentials/alpaca'

{"12345678": {"api_key": "XXXXXXXXXXXXXXXXXX", "realtime_data": "sip", "trading_mode": "live"}, "P1234567": {"api_key": "PXXXXXXXXXXXXXXXXXX", "realtime_data": "iex", "trading_mode": "paper"}}
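Because the configured keys are organized by account number, it is straightforward to pick out the accounts for a given trading mode. The following sketch assumes the dictionary shape shown above; the helper name accounts_by_trading_mode is hypothetical, and in a live deployment you would pass it the result of get_alpaca_key() rather than the sample data.

```python
from typing import Dict, List


def accounts_by_trading_mode(keys: Dict[str, dict], trading_mode: str) -> List[str]:
    """Return the account numbers configured for the given trading mode.

    Hypothetical helper: assumes the dict shape shown in the output above.
    """
    return sorted(
        account
        for account, details in keys.items()
        if details.get("trading_mode") == trading_mode
    )


# Sample data copied from the example output above
sample_keys = {
    "12345678": {"api_key": "XXXXXXXXXXXXXXXXXX", "realtime_data": "sip", "trading_mode": "live"},
    "P1234567": {"api_key": "PXXXXXXXXXXXXXXXXXX", "realtime_data": "iex", "trading_mode": "paper"},
}

live_accounts = accounts_by_trading_mode(sample_keys, "live")
paper_accounts = accounts_by_trading_mode(sample_keys, "paper")
print("live:", live_accounts)
print("paper:", paper_accounts)
```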

To later change your real-time data permission, simply re-enter the credentials with the new permission:

$ quantrocket license alpaca-key --api-key 'XXXXXXXXXXXXXXXXXX' --live --realtime-data 'iex'

Enter Alpaca secret key:

status: successfully set Alpaca live API key

>>> set_alpaca_key(api_key="XXXXXXXXXXXXXXXXXX", trading_mode="live", realtime_data="iex")

Enter Alpaca secret key:

{'status': 'successfully set Alpaca live API key'}

$ curl -X PUT 'http://houston/license-service/credentials/alpaca' -d 'api_key=XXXXXXXXXXXXXXXXXX&secret_key=XXXXXXXXXXXXXXXXXX&trading_mode=live&realtime_data=iex'

{"status": "successfully set Alpaca live API key"}

Polygon.io

Your credentials are encrypted at rest and never leave your deployment.

To enable access to Polygon.io data, enter your Polygon.io API key:

$ quantrocket license polygon-key 'XXXXXXXXXXXXXXXXXX'

status: successfully set Polygon API key

>>> from quantrocket.license import set_polygon_key

>>> set_polygon_key(api_key="XXXXXXXXXXXXXXXXXX")

{'status': 'successfully set Polygon API key'}

$ curl -X PUT 'http://houston/license-service/credentials/polygon' -d 'api_key=XXXXXXXXXXXXXXXXXX'

{"status": "successfully set Polygon API key"}

You can view the currently configured API key:

$ quantrocket license polygon-key

api_key: XXXXXXXXXXXXXXXXXX

>>> from quantrocket.license import get_polygon_key

>>> get_polygon_key()

{'api_key': 'XXXXXXXXXXXXXXXXXX'}

$ curl -X GET 'http://houston/license-service/credentials/polygon'

{"api_key": "XXXXXXXXXXXXXXXXXX"}

Nasdaq Data Link (Quandl)

Nasdaq acquired Quandl in 2018 and rebranded Quandl as Nasdaq Data Link in 2021. However, QuantRocket APIs reflect the original Quandl branding.

Your credentials are encrypted at rest and never leave your deployment.

Users who subscribe to Sharadar data through Nasdaq Data Link (formerly Quandl) can access Sharadar data in QuantRocket. To enable access, enter your Nasdaq/Quandl API key:

$ quantrocket license quandl-key 'XXXXXXXXXXXXXXXXXX'

status: successfully set Quandl API key

>>> from quantrocket.license import set_quandl_key

>>> set_quandl_key(api_key="XXXXXXXXXXXXXXXXXX")

{'status': 'successfully set Quandl API key'}

$ curl -X PUT 'http://houston/license-service/credentials/quandl' -d 'api_key=XXXXXXXXXXXXXXXXXX'

{"status": "successfully set Quandl API key"}

You can view the currently configured API key:

$ quantrocket license quandl-key

api_key: XXXXXXXXXXXXXXXXXX

>>> from quantrocket.license import get_quandl_key

>>> get_quandl_key()

{'api_key': 'XXXXXXXXXXXXXXXXXX'}

$ curl -X GET 'http://houston/license-service/credentials/quandl'

{"api_key": "XXXXXXXXXXXXXXXXXX"}

IDEs and Editors

QuantRocket allows you to work in several different IDEs (integrated development environments) and editors.

Comparison

A summary comparison of the available IDEs and editors is shown below:

| | JupyterLab | Eclipse Theia | VS Code |
|---|---|---|---|
| ideal for | interactive research | code editing from any computer | desktop code editing |
| runs in | browser | browser | desktop |
| supports Jupyter Notebooks? | yes | no | experimental |
| supports Terminals? | yes | no | yes |
| setup required? | no | no | yes |

JupyterLab is the primary user interface for QuantRocket. It is an ideal environment for interactive research. You can access QuantRocket's Python API through JupyterLab Consoles and Notebooks, and you can access QuantRocket's command line interface (CLI) through JupyterLab Terminals.

A limitation of JupyterLab is that its text editor is very basic, providing syntax highlighting but not much more. For a better code editing experience, you can use Eclipse Theia or Visual Studio Code.

Eclipse Theia and VS Code have similar user interfaces, so what are the differences? Eclipse Theia runs in the browser and requires no setup, so you can edit your code from any computer. Theia provides syntax highlighting, auto-completion, linting, and Git integration. Other features such as terminals are disabled.

VS Code runs on your desktop and requires some basic setup, but offers a fuller-featured editing experience. We suggest using VS Code on your main workstation and using Eclipse Theia when on-the-go.

JupyterLab

See the QuickStart for a hands-on overview of JupyterLab.

A recommended workflow for Moonshot strategies and custom scripts is to develop your code interactively in a Jupyter notebook then transfer it to a .py file.

Eclipse Theia

Access Eclipse Theia from the JupyterLab launcher:

Visual Studio Code

You can install Visual Studio Code on your desktop and attach it to your local or cloud deployment. This allows you to edit code and open terminals from within VS Code. VS Code utilizes the environment provided by the QuantRocket container you attach to, so autocomplete and other features are based on the QuantRocket environment, meaning there's no need to manually replicate QuantRocket's environment on your local computer.

Follow these steps to use VS Code with QuantRocket.

- First, download and install VS Code for your operating system.

- In VS Code, open the extension manager and install the following extensions:

- Python

- Docker

- Remote - Containers

- For cloud deployments only: By default, VS Code will be able to see any Docker containers running on your local machine. To make VS Code see your QuantRocket containers running remotely in the cloud, run docker context use cloud, just as you would to deploy QuantRocket to the cloud. This command points Docker to the remote host where you are running QuantRocket and causes VS Code to see the containers running remotely. (Alternatively, you can change the Docker context from the Contexts section of the Docker panel in VS Code.)

- Open the Docker panel in the side bar, find the jupyter container, right-click, and choose "Attach Visual Studio Code". A new window opens.

- (The original VS Code window still points to your local computer and can be used to edit your local projects.)

- The new VS Code window that opened is attached to the jupyter container. VS Code will automatically install itself on the jupyter container.

- Any extensions you may have installed on your local VS Code are not automatically installed on the remote VS Code, so you should install them. Open the Extensions Manager and install, at minimum, the Python extension, and anything else you like. VS Code remembers what you install in a local configuration file and restores your desired environment in the future even if you destroy and re-create the container.

- In the Explorer window, click Open Folder, type 'codeload', then Open Folder. The files on your jupyter container will now be displayed in the VS Code file browser.

Jupyter notebooks in VS Code

Support for running Jupyter notebooks in VS Code is experimental. If you encounter problems starting notebooks in VS Code, please use JupyterLab instead.

If you wish to use Jupyter notebooks in VS Code, follow these steps:

- Open the command palette (View > Command Palette) and search for and select the command called Python: Specify Local or Remote Jupyter Server for connections.

- On the next menu, select Existing: Specify the URI of an existing server.

- Enter the following URL: http://localhost/jupyter (this applies both to local and cloud deployments).

- Reload the VS Code window if prompted to do so.

- Open an existing Jupyter notebook. (Creating notebooks from within VS Code may or may not work.)

- The first time you execute a cell, VS Code will prompt for a password. Simply hit enter. (No password is needed as you are already inside jupyter and simply connecting to localhost.)

Terminal utilities

.bashrc

You can customize your JupyterLab Terminals by creating a .bashrc file and storing it at /codeload/.bashrc. This file will be run when you open a new terminal, just like on a standard Linux distribution.

An example use is to create aliases for commonly typed commands. For example, placing the following alias in your /codeload/.bashrc file will allow you to check your balance by simply typing balance:

alias balance="quantrocket account balance -l -f NetLiquidation | csvlook"

After adding or editing a .bashrc file, you must open new Terminals for the changes to take effect.

csvkit

Many QuantRocket API endpoints return CSV files. csvkit is a suite of utilities that makes it easier to work with CSV files from the command line. To make a CSV file more easily readable, use csvlook:

$ quantrocket master get --exchanges 'XNAS' 'XNYS' | csvlook -I

| Sid | Symbol | Exchange | Country | Currency | SecType | Etf | Timezone | Name |

| -------------- | ------ | -------- | ------- | -------- | ------- | --- | ------------------- | -------------------------- |

| FIBBG000B9XRY4 | AAPL | XNAS | US | USD | STK | 0 | America/New_York | APPLE INC |

| FIBBG000BFWKC0 | MON | XNYS | US | USD | STK | 0 | America/New_York | MONSANTO CO |

| FIBBG000BKZB36 | HD | XNYS | US | USD | STK | 0 | America/New_York | HOME DEPOT INC |

| FIBBG000BMHYD1 | JNJ | XNYS | US | USD | STK | 0 | America/New_York | JOHNSON & JOHNSON |

Another useful utility is csvgrep, which can be used to filter CSV files on fields not natively filterable by QuantRocket's API:

$ quantrocket master get --exchanges 'XNYS' --fields 'usstock_SecurityType2' | csvgrep --columns 'usstock_SecurityType2' --match 'Depositary Receipt' > nyse_adrs.csv
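If you prefer to do this kind of filtering in Python, a similar result can be sketched with the standard library csv module. This is only an illustration of the idea and not a QuantRocket or csvkit API: the helper name filter_csv_rows is hypothetical, it assumes the CSV has already been retrieved as text, it uses a substring match, and the sample rows use placeholder Sids rather than real records.

```python
import csv
import io


def filter_csv_rows(csv_text: str, column: str, match: str) -> str:
    """Keep only the rows whose `column` value contains `match`.

    Hypothetical helper illustrating a csvgrep-style filter in Python.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    output = io.StringIO()
    writer = csv.DictWriter(output, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        # Treat a missing value as an empty string so it never matches
        if match in (row.get(column) or ""):
            writer.writerow(row)
    return output.getvalue()


# Placeholder rows for illustration only (not real securities master records)
sample_csv = (
    "Sid,usstock_SecurityType2\n"
    "SID_A,Common Stock\n"
    "SID_B,Depositary Receipt\n"
)

filtered = filter_csv_rows(sample_csv, "usstock_SecurityType2", "Depositary Receipt")
print(filtered)
```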

json2yml

For records which are too wide for the Terminal viewing area in CSV format, a convenient option is to request JSON and convert it to YAML using the json2yml utility:

$ quantrocket master get --symbols 'AAPL' --json | json2yml

-

Sid: "FIBBG000B9XRY4"

Symbol: "AAPL"

Exchange: "XNAS"

Country: "US"

Currency: "USD"

SecType: "STK"

Etf: 0

Timezone: "America/New_York"

Name: "APPLE INC"

PriceMagnifier: 1

Multiplier: 1

Delisted: 0

DateDelisted: null

LastTradeDate: null

RolloverDate: null

Custom JupyterLab environments

Follow these steps to create a custom conda environment and make it available as a custom kernel from the JupyterLab launcher.

This is an advanced topic. Most users will not need to do this.

Keep in mind that QuantRocket has a distributed architecture and these steps will only create the custom environment within the jupyter container, not in other containers where user code may run, such as the moonshot, zipline, and satellite containers.

First-time install

First, in a JupyterLab terminal, initialize your bash shell then exit the terminal:

$ conda init 'bash'

$ exit

Open a new JupyterLab terminal, then clone the base environment and activate your new environment:

$ conda create --name 'myclone' --clone 'base'

$ conda activate 'myclone'

Install new packages to customize your conda environment. For easier repeatability, list your packages in a text file in the /codeload directory and install the packages from file. One of the packages should be ipykernel:

(myclone) $ echo 'ipykernel' > /codeload/quantrocket.jupyter.conda.myclone.txt

(myclone) $ conda install --file '/codeload/quantrocket.jupyter.conda.myclone.txt'

Next, create a new kernel spec associated with your custom conda environment. For easier repeatability, create the kernel spec under the /codeload directory instead of directly in the default location:

(myclone) $ ipython kernel install --name 'mykernel' --display-name 'My Custom Kernel' --prefix '/codeload/kernels'

Install the kernel. This command copies the kernel spec to a location where JupyterLab looks:

(myclone) $ jupyter kernelspec install '/codeload/kernels/share/jupyter/kernels/mykernel'

Finally, to activate the change, open Terminal (macOS/Linux) or PowerShell (Windows) and restart the jupyter container:

$ docker compose restart jupyter

The new kernel will appear in the Launcher menu:

Re-install after container redeploy

Whenever you redeploy the jupyter container (either due to updating the container version or force recreating the container), the filesystem is replaced and thus your custom conda environment and JupyterLab kernel will be lost. The re-install process can omit a few steps because you saved the conda package file and kernel spec to your /codeload directory. The simplified process is as follows. Initialize your shell:

$ conda init 'bash'

$ exit

Reopen a terminal, then:

$ conda create --name 'myclone' --clone 'base'

$ conda activate 'myclone'

(myclone) $ conda install --file '/codeload/quantrocket.jupyter.conda.myclone.txt'

(myclone) $ jupyter kernelspec install '/codeload/kernels/share/jupyter/kernels/mykernel'

Then, restart the jupyter container to activate the change:

$ docker compose restart jupyter

Teams

Teams with a multi-user license can run more than one QuantRocket deployment. Because QuantRocket's primary user interface is JupyterLab, which is not designed to be a multi-user environment, teams should run a separate deployment for each user. The recommended deployment strategy is to run a primary deployment for third-party data collection and live trading, and one or more research deployments for research and backtesting.

| | Deployed to | How many | Connects to Brokers and Data Providers | Used for | Used by |
|---|---|---|---|---|---|
| Primary deployment | Cloud | 1 | Yes | Third-party data collection, live trading | Team owner or administrator |
| Research deployment(s) | Cloud or local | 1 or more | No | Research and backtesting | Quant researchers |

Cloud vs local

QuantRocket can either be installed locally or in the cloud. In the context of teams, the main tradeoff between cloud and local is cost vs control. Local deployments allow team members to utilize their existing workstations, saving on cloud costs. However, cloud deployments offer the team owner additional control and auditing by providing access to the team member's work environment.

The installation process also differs for cloud vs local deployments. For cloud deployments, the team owner or administrator installs Docker and deploys QuantRocket to the cloud, then provides the team member with login credentials to access the deployment. For local deployments, each team member installs Docker and deploys QuantRocket on his or her own machine.

A summary is shown below:

| | Who performs installation | Incurs cloud costs | Easy to audit |
|---|---|---|---|
| Cloud | Team owner/administrator | yes | yes |
| Local | Researcher | no | no |

Multiple cloud deployments

A team owner or administrator can deploy QuantRocket to multiple cloud servers from the administrator's own workstation. This provides a central place to manage multiple deployments.

To install multiple cloud deployments, follow the cloud installation tutorial, but observe the following modifications.

Unique deployment names

Wherever the tutorial uses the name quantrocket or cloud, you should instead choose a unique name for each deployment, for example quantrocket1, quantrocket2, etc. Apply the unique names in the following contexts:

| | Single cloud deployment | Multiple cloud deployments |
|---|---|---|
| Docker Context name | cloud | cloud1, cloud2, etc. |
| Domain name | quantrocket.abc-capital.com | quantrocket1.abc-capital.com, quantrocket2.abc-capital.com, etc. |
| Local folder containing Compose file | ~/quantrocket | ~/quantrocket1, ~/quantrocket2, etc. |

(The names quantrocket1 etc. are only examples; you are free to choose different names.)

The following commands show how you would bring up two deployments by navigating to the appropriate local folder and specifying the corresponding Docker Context:

$ cd ~/quantrocket1

$ docker compose --context cloud1 up -d

$ cd ~/quantrocket2

$ docker compose --context cloud2 up -d
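When managing more than a couple of deployments, an administrator may prefer to generate these commands rather than type them. The sketch below simply pairs each local folder with the corresponding command, using the example naming scheme from the table above (quantrocket1/cloud1, quantrocket2/cloud2, and so on). The helper name deployment_commands is hypothetical, and the numbering scheme is an assumption you should adapt to your own names.

```python
from typing import List, Tuple


def deployment_commands(count: int) -> List[Tuple[str, List[str]]]:
    """Return (local folder, command) pairs for `count` cloud deployments.

    Assumes the example naming scheme: ~/quantrocketN and Docker Context cloudN.
    """
    plans = []
    for n in range(1, count + 1):
        folder = f"~/quantrocket{n}"
        command = ["docker", "compose", "--context", f"cloud{n}", "up", "-d"]
        plans.append((folder, command))
    return plans


plans = deployment_commands(2)
for folder, command in plans:
    print(f"cd {folder} && {' '.join(command)}")

# To execute instead of print (runs against your real deployments):
# import os, subprocess
# for folder, command in plans:
#     subprocess.run(command, cwd=os.path.expanduser(folder), check=True)
```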

Unique Houston environment variables

The Houston domain, username, and password determine the URL and credentials your team members will use to log in to their cloud deployments. The installation tutorial suggests setting environment variables for your deployment's domain, username, and password. However, this approach is not as suitable when you need to set up multiple deployments with different variables for each.

Instead, the recommended approach for team administrators is to create a docker-compose.override.yml file in each of the local folders containing the Compose files (~/quantrocket1, ~/quantrocket2, etc.) and set the Houston variables directly in the override file. Each docker-compose.override.yml should look similar to the following, with the appropriate variables for each deployment:

version: '2.4'
services:
  houston:
    environment:
      BASIC_AUTH_USER: 'usernameyourteammemberwilluse'
      BASIC_AUTH_PASSWD: 'passwordyourteammemberwilluse'
      LETSENCRYPT_DOMAIN: 'quantrocket1.abc-capital.com'
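Since each override file differs only in its three Houston variables, the files can be rendered from a single template. The following is a minimal sketch using plain string formatting; the helper name render_override and the sample username and password are hypothetical, and the template assumes the values contain no single quotes (which would need YAML escaping).

```python
OVERRIDE_TEMPLATE = """\
version: '2.4'
services:
  houston:
    environment:
      BASIC_AUTH_USER: '{user}'
      BASIC_AUTH_PASSWD: '{password}'
      LETSENCRYPT_DOMAIN: '{domain}'
"""


def render_override(user: str, password: str, domain: str) -> str:
    """Render the contents of a docker-compose.override.yml for one deployment.

    Hypothetical helper; assumes the values contain no single quotes.
    """
    for value in (user, password, domain):
        if "'" in value:
            raise ValueError("values containing single quotes are not supported by this sketch")
    return OVERRIDE_TEMPLATE.format(user=user, password=password, domain=domain)


# Placeholder credentials for illustration only
override_text = render_override(
    user="researcher2",
    password="choose-a-strong-password",
    domain="quantrocket2.abc-capital.com",
)
print(override_text)

# To write the file into the matching local folder:
# from pathlib import Path
# Path("~/quantrocket2/docker-compose.override.yml").expanduser().write_text(override_text)
```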

Software activation

After deploying QuantRocket, the team administrator should access JupyterLab and enter the license key. (For security reasons, don't give the license key to your team members to enter themselves; see the section below for more on license key sharing.)

Team member access

Finally, provide your team members with the cloud deployment URL and login credentials you have established for them.

License key sharing

Sharing your license key with team members requires care because team members may leave your organization. An ex-team member with your license key could utilize one of your license seats for their own use, thus reducing the seats available for you. There are 3 options for securely sharing your license with team members.

Option 1: Administer cloud deployments

If you set up cloud deployments for your team members and enter your license key into each cloud deployment yourself, there is no security risk. The license key is encrypted at rest and is obfuscated in the display output (for example YXV0........ABCD), so your team members will not have access to your full license key.

Option 2: Share and rotate

If your team members run QuantRocket locally on their own machines, you can share your license key with them, then whenever a team member leaves your organization, you can rotate your license key and distribute the new license key to your remaining team members.

Option 3: Link license keys

A third option is to instruct your team members to create their own QuantRocket accounts and link their accounts to yours. This allows the team members to activate the software by entering their own license key, rather than yours. The license profile output will display the team member's own license key, the team owner's email to which they are linked, and the team's software license:

$ quantrocket license get

licensekey: XXXX....XXXX
software_license:
  account:
    account_limit: XXXXXX USD
  concurrent_install_limit: 4
  license_type: Professional
  user_limit: 3
team: team-owner@abc-capital.com

>>> from quantrocket.license import get_license_profile

>>> get_license_profile()

{'licensekey': 'XXXX....XXXX',

'software_license': {'license_type': 'Professional',

'user_limit': 3,

'concurrent_install_limit': 4,

'account': {'account_limit': 'XXXXXX USD'}},

'team': 'team-owner@abc-capital.com'}

$ curl -X GET 'http://houston/license-service/license'

{"licensekey": "XXXX....XXXX", "software_license": {"license_type": "Professional", "user_limit": 3, "concurrent_install_limit": 4, "account": {"account_limit": "XXXXXX USD"}}, "team": "team-owner@abc-capital.com"}

To link your team members to your account, follow these steps:

- Instruct each team member to register for their own QuantRocket account and generate their own license key.

- Open a support ticket and provide the emails your team members registered under. We will link their accounts to yours.

- Instruct your team members to enter their own license key into the software.

Data sharing

If your team members need access to third-party data such as data from your broker, the recommended approach is to collect the data on the primary deployment, push it to Amazon S3, then pull it from S3 onto the research deployments. That way, you only need to enable third-party API access on the primary deployment. This is not only a better security practice but is also necessary for third-party APIs such as IB Gateway which limit you to one concurrent connection.

For the primary deployment, create IAM credentials with read/write access to your S3 bucket. For the research deployments, you can create separate IAM credentials with read permission only. This ensures a one-way flow of data from the primary deployment to the research deployments.

See the Database Management section for more details on connecting to S3.
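As an illustration of the read-only credentials for research deployments, the sketch below builds an IAM policy document that allows listing a bucket and reading its objects but not writing to it. The bucket name is a placeholder, the helper name read_only_bucket_policy is hypothetical, and the exact set of S3 permissions QuantRocket needs is an assumption not stated in this section, so verify it against the Database Management section before use.

```python
import json


def read_only_bucket_policy(bucket: str) -> dict:
    """Build an IAM policy document granting read-only access to one S3 bucket.

    Assumption: listing the bucket and getting objects is sufficient for
    pulling data onto a research deployment.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Reading applies to the objects inside the bucket
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


# "my-example-bucket" is a placeholder bucket name
policy = read_only_bucket_policy("my-example-bucket")
print(json.dumps(policy, indent=2))
```

Because the policy omits s3:PutObject and s3:DeleteObject, credentials attached to it cannot modify the data pushed by the primary deployment.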

Code sharing

You can set up Git repositories to enable sharing of code and notebooks between team members, with access control managed directly on the Git repositories. See the Code Management section for more details on cloning from Git and pushing to Git.

Auditing

Team owners who need the ability to monitor their team members' activities should set up cloud deployments for their team members rather than having the team members run QuantRocket locally. To audit a cloud deployment, the team owner can simply log in to the deployment and review the code and notebooks or download the log files.

Securities Master

The securities master is the central repository of available assets. With QuantRocket's securities master, you can:

- Collect lists of all available securities from multiple data providers;

- Query reference data about securities, such as ticker symbol, currency, exchange, sector, expiration date (in the case of derivatives), and so on;

- Flexibly group securities into universes that make sense for your research or trading strategies.

QuantRocket assigns each security a unique ID known as its "Sid" (short for "security ID"). Sids allow securities to be uniquely and consistently referenced over time regardless of ticker changes or ticker symbol inconsistencies between vendors. Sids make it possible to mix-and-match data from different providers. QuantRocket Sids are primarily based on Bloomberg-sponsored OpenFIGI identifiers.

All components of the software, from historical and fundamental data collection to order and execution tracking, utilize Sids and thus depend on the securities master.

Collect listings

Generally, the first step before utilizing any dataset or sending orders to any broker is to collect the list of available securities for that provider.

Note on terminology: In QuantRocket, "collecting" data means retrieving it from a third party or from the QuantRocket cloud and storing it in a local database. Once data has been collected, you can "download" it, which means querying the stored data from your local database for use in your analysis or trading strategies.

Because QuantRocket supports multiple data vendors and brokers, you may collect the same listing (for example AAPL stock) from multiple providers. QuantRocket will consolidate the overlapping records into a single, combined record, as explained in more detail below.

Alpaca

Alpaca customers should collect Alpaca's list of available securities before they begin live or paper trading:

$ quantrocket master collect-alpaca

msg: successfully loaded alpaca securities

status: success

>>> from quantrocket.master import collect_alpaca_listings

>>> collect_alpaca_listings()

{'status': 'success', 'msg': 'successfully loaded alpaca securities'}

$ curl -X POST 'http://houston/master/securities/alpaca'

{"status": "success", "msg": "successfully loaded alpaca securities"}

An example Alpaca record for AAPL is shown below:

Sid: "FIBBG000B9XRY4"

alpaca_AssetClass: "us_equity"

alpaca_AssetId: "b0b6dd9d-8b9b-48a9-ba46-b9d54906e415"

alpaca_EasyToBorrow: 1

alpaca_Exchange: "NASDAQ"

alpaca_Marginable: 1

alpaca_Name: null

alpaca_Shortable: 1

alpaca_Status: "active"

alpaca_Symbol: "AAPL"

alpaca_Tradable: 1

EDI

EDI listings are automatically collected when you collect EDI historical data, but they can also be collected separately. Specify one or more MICs (market identifier codes):

$ quantrocket master collect-edi --exchanges 'XSHG' 'XSHE'

exchanges:
  XSHE: successfully loaded XSHE securities
  XSHG: successfully loaded XSHG securities
status: success

>>> from quantrocket.master import collect_edi_listings

>>> collect_edi_listings(exchanges=["XSHG", "XSHE"])

{'status': 'success',

'exchanges': {'XSHG': 'successfully loaded XSHG securities', 'XSHE': 'successfully loaded XSHE securities'}}

$ curl -X POST 'http://houston/master/securities/edi?exchanges=XSHG&exchanges=XSHE'

{"status": "success", "exchanges": {"XSHG": "successfully loaded XSHG securities", "XSHE": "successfully loaded XSHE securities"}}

For sample data, use the MIC code FREE.
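The HTTP example above passes multiple exchanges by repeating the exchanges query parameter. If you build such URLs programmatically, the standard library can produce the repeated form for you. This is a minimal sketch; the helper name build_collect_edi_url is hypothetical, and the base URL is copied from the curl example above.

```python
from typing import List
from urllib.parse import urlencode


def build_collect_edi_url(
    exchanges: List[str],
    base_url: str = "http://houston/master/securities/edi",
) -> str:
    """Build the EDI listings URL, repeating `exchanges` once per MIC."""
    # doseq=True expands the list into exchanges=A&exchanges=B
    query = urlencode({"exchanges": exchanges}, doseq=True)
    return f"{base_url}?{query}"


url = build_collect_edi_url(["XSHG", "XSHE"])
print(url)
```

The resulting URL would be sent with a POST request, as in the curl example.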

An example EDI record for AAPL is shown below:

Sid: "FIBBG000B9XRY4"

edi_Cik: 320193

edi_CountryInc: "United States of America"

edi_CountryListed: "United States of America"

edi_Currency: "USD"

edi_DateDelisted: null

edi_ExchangeListingStatus: "Listed"

edi_FirstPriceDate: "2007-01-03"

edi_GlobalListingStatus: "Active"

edi_Industry: "Information Technology"

edi_IsPrimaryListing: 1

edi_IsoCountryInc: "US"

edi_IsoCountryListed: "US"

edi_IssuerId: 30017

edi_IssuerName: "Apple Inc"

edi_LastPriceDate: null

edi_LocalSymbol: "AAPL"

edi_Mic: "XNAS"

edi_MicSegment: "XNGS"

edi_MicTimezone: "America/New_York"

edi_PreferredName: "Apple Inc"

edi_PrimaryMic: "XNAS"

edi_RecordCreated: "2001-05-05"

edi_RecordModified: "2020-02-10 13:17:27"

edi_SecId: 33449

edi_SecTypeCode: "EQS"

edi_SecTypeDesc: "Equity Shares"

edi_SecurityDesc: "Ordinary Shares"

edi_Sic: "Electronic Computers"

edi_SicCode: 3571

edi_SicDivision: "Manufacturing"

edi_SicIndustryGroup: "Computer And Office Equipment"

edi_SicMajorGroup: "Industrial And Commercial Machinery And Computer Equipment"

edi_StructureCode: null

edi_StructureDesc: null

Figi

QuantRocket Sids are based on FIGI identifiers. While the OpenFIGI API is primarily a way to map securities to FIGI identifiers, it also provides several useful security attributes including market sector, a detailed security type, and share class-level FIGI identifiers. You can collect FIGI fields for all available QuantRocket securities:

$ quantrocket master collect-figi

msg: successfully loaded FIGIs

status: success

>>> from quantrocket.master import collect_figi_listings

>>> collect_figi_listings()

{'status': 'success', 'msg': 'successfully loaded FIGIs'}

$ curl -X POST 'http://houston/master/securities/figi'

{"status": "success", "msg": "successfully loaded FIGIs"}

An example FIGI record for AAPL is shown below:

Sid: "FIBBG000B9XRY4"

figi_CompositeFigi: "BBG000B9XRY4"

figi_ExchCode: "US"

figi_Figi: "BBG000B9XRY4"

figi_IsComposite: 1

figi_MarketSector: "Equity"

figi_Name: "APPLE INC"

figi_SecurityDescription: "AAPL"

figi_SecurityType: "Common Stock"

figi_SecurityType2: "Common Stock"

figi_ShareClassFigi: "BBG001S5N8V8"

figi_Ticker: "AAPL"

figi_UniqueId: "EQ0010169500001000"

figi_UniqueIdFutOpt: null

Interactive Brokers

Interactive Brokers can be utilized both as a data provider and a broker. First, decide which exchange(s) you want to work with. You can view exchange listings on the IBKR website or use QuantRocket to summarize the IBKR website by security type:

$ quantrocket master list-ibkr-exchanges --regions 'asia' --sec-types 'STK'

STK:
  Australia:
    - ASX
    - CHIXAU
  Hong Kong:
    - SEHK
    - SEHKNTL
    - SEHKSZSE
  India:
    - NSE
  Japan:
    - CHIXJ
    - JPNNEXT
    - TSEJ
  Singapore:
    - SGX

>>> from quantrocket.master import list_ibkr_exchanges

>>> list_ibkr_exchanges(regions=["asia"], sec_types=["STK"])

{'STK': {'Australia': ['ASX', 'CHIXAU'],

'Hong Kong': ['SEHK', 'SEHKNTL', 'SEHKSZSE'],

'India': ['NSE'],

'Japan': ['CHIXJ', 'JPNNEXT', 'TSEJ'],

'Singapore': ['SGX']}}

$ curl 'http://houston/master/exchanges/ibkr?sec_types=STK&regions=asia'

{"STK": {"Australia": ["ASX", "CHIXAU"], "Hong Kong": ["SEHK", "SEHKNTL", "SEHKSZSE"], "India": ["NSE"], "Japan": ["CHIXJ", "JPNNEXT", "TSEJ"], "Singapore": ["SGX"]}}
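The exchange summary is nested by security type and then by country. If you just want a flat list of exchange codes, for example to decide which ones to collect, the nesting can be collapsed as in the sketch below. The helper name flatten_exchanges is hypothetical, and the sample data is copied from the output above; in a live deployment you would pass it the result of list_ibkr_exchanges().

```python
from typing import Dict, List


def flatten_exchanges(exchanges_by_type: Dict[str, Dict[str, List[str]]]) -> List[str]:
    """Collapse {sec_type: {country: [exchange, ...]}} into a sorted list of unique codes."""
    codes = set()
    for countries in exchanges_by_type.values():
        for exchange_list in countries.values():
            codes.update(exchange_list)
    return sorted(codes)


# Sample data copied from the example output above
sample_exchanges = {
    "STK": {
        "Australia": ["ASX", "CHIXAU"],
        "Hong Kong": ["SEHK", "SEHKNTL", "SEHKSZSE"],
        "India": ["NSE"],
        "Japan": ["CHIXJ", "JPNNEXT", "TSEJ"],
        "Singapore": ["SGX"],
    }
}

exchange_codes = flatten_exchanges(sample_exchanges)
print(exchange_codes)
```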

Specify the IBKR exchange code (not the MIC) to collect all listings on the exchange, optionally filtering by security type, symbol, or currency. For example, this would collect all stock listings on the Hong Kong Stock Exchange:

$ quantrocket master collect-ibkr --exchanges 'SEHK' --sec-types 'STK'

status: the IBKR listing details will be collected asynchronously

>>> from quantrocket.master import collect_ibkr_listings

>>> collect_ibkr_listings(exchanges="SEHK", sec_types=["STK"])

{'status': 'the IBKR listing details will be collected asynchronously'}

$ curl -X POST 'http://houston/master/securities/ibkr?exchanges=SEHK&sec_types=STK'

{"status": "the IBKR listing details will be collected asynchronously"}

$ quantrocket flightlog stream --hist 5

quantrocket.master: INFO Collecting SEHK STK listings from IBKR website

quantrocket.master: INFO Requesting details for 2630 SEHK listings found on IBKR website

quantrocket.master: INFO Saved 2630 SEHK listings to securities master database

The number of listings collected from the IBKR website might be larger than the number of listings actually saved to the database. This is because the IBKR website lists all symbols that trade on a given exchange, even if the exchange is not the primary listing exchange. For example, the primary listing exchange for Alcoa (AA) is NYSE, but the IBKR website also lists Alcoa under the BATS exchange because Alcoa also trades on BATS (and many other US exchanges). QuantRocket saves Alcoa's contract details when you collect NYSE listings, not when you collect BATS listings.

For futures, the number of contracts saved to the database will typically be larger than the number of listings found on the IBKR website because the website only lists underlyings but QuantRocket saves all available expiries for each underlying.

For free sample data, specify the exchange code FREE.

An example IBKR record for AAPL is shown below:

Sid: "FIBBG000B9XRY4"

ibkr_AggGroup: 1

ibkr_Category: "Computers"

ibkr_ComboLegs: null

ibkr_ConId: 265598

ibkr_ContractMonth: null

ibkr_Currency: "USD"

ibkr_Cusip: null

ibkr_DateDelisted: null

ibkr_Delisted: 0

ibkr_Etf: 0

ibkr_EvMultiplier: 0

ibkr_EvRule: null

ibkr_Industry: "Computers"

ibkr_Isin: "US0378331005"

ibkr_LastTradeDate: null

ibkr_LocalSymbol: "AAPL"

ibkr_LongName: "APPLE INC"

ibkr_MarketName: "NMS"

ibkr_MarketRuleIds: "26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26"

ibkr_MdSizeMultiplier: 100

ibkr_MinTick: 0.01

ibkr_Multiplier: null

ibkr_PriceMagnifier: 1

ibkr_PrimaryExchange: "NASDAQ"

ibkr_RealExpirationDate: null

ibkr_Right: null

ibkr_SecType: "STK"

ibkr_Sector: "Technology"

ibkr_Strike: 0

ibkr_Symbol: "AAPL"

ibkr_Timezone: "America/New_York"

ibkr_TradingClass: "NMS"

ibkr_UnderConId: 0

ibkr_UnderSecType: null

ibkr_UnderSymbol: null

ibkr_ValidExchanges: "SMART,AMEX,NYSE,CBOE,PHLX,ISE,CHX,ARCA,ISLAND,DRCTEDGE,BEX,BATS,EDGEA,CSFBALGO,JEFFALGO,BYX,IEX,EDGX,FOXRIVER,TPLUS1,NYSENAT,PSX"

Option chains

To collect option chains from Interactive Brokers, first collect listings for the underlying securities:

$ quantrocket master collect-ibkr --exchanges 'NASDAQ' --sec-types 'STK' --symbols 'GOOG' 'FB' 'AAPL'

status: the IBKR listing details will be collected asynchronously

>>> from quantrocket.master import collect_ibkr_listings

>>> collect_ibkr_listings(exchanges="NASDAQ", sec_types=["STK"], symbols=["GOOG", "FB", "AAPL"])

{'status': 'the IBKR listing details will be collected asynchronously'}

$ curl -X POST 'http://houston/master/securities/ibkr?exchanges=NASDAQ&sec_types=STK&symbols=GOOG&symbols=FB&symbols=AAPL'

{"status": "the IBKR listing details will be collected asynchronously"}

Then request option chains by specifying the sids of the underlying stocks. In this example, we download a file of the underlying stocks and pass it as an infile to the options collection endpoint:

$ quantrocket master get -e 'NASDAQ' -t 'STK' -s 'GOOG' 'FB' 'AAPL' | quantrocket master collect-ibkr-options --infile -

status: the IBKR option chains will be collected asynchronously

>>> from quantrocket.master import download_master_file, collect_ibkr_option_chains

>>> import io

>>> f = io.StringIO()

>>> download_master_file(f, exchanges=["NASDAQ"], sec_types=["STK"], symbols=["GOOG", "FB", "AAPL"])

>>> collect_ibkr_option_chains(infilepath_or_buffer=f)

{'status': 'the IBKR option chains will be collected asynchronously'}

$ curl -X GET 'http://houston/master/securities.csv?exchanges=NASDAQ&sec_types=STK&symbols=GOOG&symbols=FB&symbols=AAPL' > nasdaq_mega.csv

$ curl -X POST 'http://houston/master/options/ibkr' --upload-file nasdaq_mega.csv

{"status": "the IBKR option chains will be collected asynchronously"}

Once the options collection has finished, you can query the options like any other security:

$ quantrocket master get -s 'GOOG' 'FB' 'AAPL' -t 'OPT' --outfile 'options.csv'

>>> from quantrocket.master import get_securities

>>> options = get_securities(symbols=["GOOG", "FB", "AAPL"], sec_types=["OPT"])

$ curl -X GET 'http://houston/master/securities.csv?symbols=GOOG&symbols=FB&symbols=AAPL&sec_types=OPT' > options.csv

Option chains often consist of hundreds, sometimes thousands, of options per underlying security. Requesting option chains for large universes of underlying securities, such as all stocks on the NYSE, can take many hours to complete.
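Because chains are large, it is often useful to narrow them down after querying. The sketch below filters a small placeholder DataFrame to calls within a strike range. It assumes the options are returned with the ibkr_Right and ibkr_Strike fields shown in the IBKR record above and that ibkr_Right uses "C" for calls and "P" for puts; the rows, the Sids, and the select_calls helper are all hypothetical.

```python
import pandas as pd

# Placeholder rows standing in for the result of querying options from the
# securities master. Sids and values are illustrative, not real records.
options = pd.DataFrame(
    {
        "ibkr_UnderSymbol": ["AAPL"] * 4,
        "ibkr_Right": ["C", "P", "C", "C"],  # assumed encoding: C=call, P=put
        "ibkr_Strike": [100.0, 100.0, 150.0, 300.0],
    },
    index=pd.Index(["OPT_1", "OPT_2", "OPT_3", "OPT_4"], name="Sid"),
)


def select_calls(options, min_strike, max_strike):
    """Keep only calls whose strike lies in [min_strike, max_strike]."""
    is_call = options["ibkr_Right"] == "C"
    in_range = options["ibkr_Strike"].between(min_strike, max_strike)
    return options[is_call & in_range]


calls = select_calls(options, 90, 200)
print(calls.index.tolist())
```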

Sharadar

Sharadar listings are automatically collected when you collect Sharadar fundamental or price data, but they can also be collected separately. Specify the country (US):

$ quantrocket master collect-sharadar --countries 'US'

countries:

US: successfully loaded US securities

status: success

>>> from quantrocket.master import collect_sharadar_listings

>>> collect_sharadar_listings(countries="US")

{'status': 'success', 'countries': {'US': 'successfully loaded US securities'}}

$ curl -X POST 'http://houston/master/securities/sharadar?countries=US'

{"status": "success", "countries": {"US": "successfully loaded US securities"}}

For sample data, use the country code FREE.

An example Sharadar record for AAPL is shown below:

Sid: "FIBBG000B9XRY4"

sharadar_Category: "Domestic"

sharadar_CompanySite: "http://www.apple.com"

sharadar_CountryListed: "US"

sharadar_Currency: "USD"

sharadar_Cusips: 37833100

sharadar_DateDelisted: null

sharadar_Delisted: 0

sharadar_Exchange: "NASDAQ"

sharadar_FamaIndustry: "Computers"

sharadar_FamaSector: null

sharadar_FirstAdded: "2014-09-24"

sharadar_FirstPriceDate: "1986-01-01"

sharadar_FirstQuarter: "1996-09-30"

sharadar_Industry: "Consumer Electronics"

sharadar_LastPriceDate: null

sharadar_LastQuarter: "2020-06-30"

sharadar_LastUpdated: "2020-07-03"

sharadar_Location: "California; U.S.A"

sharadar_Name: "Apple Inc"

sharadar_Permaticker: 199059

sharadar_RelatedTickers: null

sharadar_ScaleMarketCap: "6 - Mega"

sharadar_ScaleRevenue: "6 - Mega"

sharadar_SecFilings: "https://www.sec.gov/cgi-bin/browse-edgar?action=getcompany&CIK=0000320193"

sharadar_Sector: "Technology"

sharadar_SicCode: 3571

sharadar_SicIndustry: "Electronic Computers"

sharadar_SicSector: "Manufacturing"

sharadar_Ticker: "AAPL"

US Stock

All plans include access to historical intraday and end-of-day US stock prices. US stock listings are automatically collected when you collect the price data, but they can also be collected separately.

$ quantrocket master collect-usstock

msg: successfully loaded US stock listings

status: success

>>> from quantrocket.master import collect_usstock_listings

>>> collect_usstock_listings()

{'status': 'success', 'msg': 'successfully loaded US stock listings'}

$ curl -X POST 'http://houston/master/securities/usstock'

{"status": "success", "msg": "successfully loaded US stock listings"}

An example US stock record for AAPL is shown below:

Sid: "FIBBG000B9XRY4"

usstock_CIK: 320193

usstock_DateDelisted: null

usstock_FirstPriceDate: "2007-01-03"

usstock_Industry: "Hardware & Equipment"

usstock_LastPriceDate: null

usstock_Mic: "XNAS"

usstock_Name: "APPLE INC"

usstock_PrimaryShareSid: null

usstock_Sector: "Technology"

usstock_SecurityType: "Common Stock"

usstock_SecurityType2: "Common Stock"

usstock_Sic: "Electronic Computers"

usstock_SicCode: 3571

usstock_SicDivision: "Manufacturing"

usstock_SicIndustryGroup: "Computer And Office Equipment"

usstock_SicMajorGroup: "Industrial And Commercial Machinery And Computer Equipment"

usstock_Symbol: "AAPL"

US Stock security types

In order of granularity from least granular to most granular, the available security type fields are SecType (always 'STK' for this dataset), usstock_SecurityType2, and usstock_SecurityType. The usstock_SecurityType2 field is the one most often used for filtering universes to certain security types. Among the most common values for usstock_SecurityType2 are "Common Stock", "Mutual Fund" (ETFs), "Depositary Receipt" (ADRs), and "Preferred Stock". To see all possible choices:

from quantrocket.master import get_securities

securities = get_securities(vendors="usstock", fields="usstock*")

securities.groupby([securities.usstock_SecurityType2, securities.usstock_SecurityType]).usstock_Symbol.count()
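Once you know which values you want, filtering a universe by usstock_SecurityType2 is a one-line pandas operation. The following is a minimal sketch on a placeholder DataFrame, assuming the securities are held in a DataFrame indexed by Sid; the Sids and symbols are made up for illustration.

```python
import pandas as pd

# Illustrative stand-in for a securities DataFrame with usstock fields.
# Sids and symbols are placeholders, not real records.
securities = pd.DataFrame(
    {
        "usstock_Symbol": ["AAA", "BBB", "CCC", "DDD"],
        "usstock_SecurityType2": [
            "Common Stock",
            "Mutual Fund",
            "Depositary Receipt",
            "Preferred Stock",
        ],
    },
    index=pd.Index(["SID_A", "SID_B", "SID_C", "SID_D"], name="Sid"),
)

# Keep common stocks and ADRs; drop ETFs and preferred shares.
wanted_types = ["Common Stock", "Depositary Receipt"]
filtered = securities[securities["usstock_SecurityType2"].isin(wanted_types)]
print(filtered.index.tolist())
```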

Primary share class

Some companies trade under multiple share classes. For example, Alphabet (Google) trades under two different share classes with different voting rights, "GOOGL" (A shares) and "GOOG" (C shares). The usstock_PrimaryShareSid field provides a link from the secondary share to the primary share. In the case of Alphabet, "GOOGL" is considered the primary share and "GOOG" the secondary share, so the usstock_PrimaryShareSid field for "GOOG" points to the Sid of "GOOGL". If usstock_PrimaryShareSid is null, this indicates that the security is the primary share class (which could be because it is the only share class).

The most common use of the usstock_PrimaryShareSid field is to deduplicate companies with multiple share classes, to avoid trading multiple highly correlated securities from the same company. This can be achieved by filtering your universe to securities where usstock_PrimaryShareSid is null.
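As a minimal sketch of this deduplication, assuming the securities are held in a pandas DataFrame indexed by Sid (the Sids below are placeholders, not real identifiers):

```python
import pandas as pd

# Placeholder rows: GOOG points to GOOGL as its primary share, while
# GOOGL and AAPL have a null usstock_PrimaryShareSid.
securities = pd.DataFrame(
    {
        "usstock_Symbol": ["GOOGL", "GOOG", "AAPL"],
        "usstock_PrimaryShareSid": [None, "SID_GOOGL", None],
    },
    index=pd.Index(["SID_GOOGL", "SID_GOOG", "SID_AAPL"], name="Sid"),
)

# A null usstock_PrimaryShareSid marks the primary (or only) share class.
primary_only = securities[securities["usstock_PrimaryShareSid"].isnull()]
print(primary_only["usstock_Symbol"].tolist())
```

The secondary share class (GOOG) is dropped and one security per company remains.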

Note that usstock_PrimaryShareSid is only populated when the secondary and primary shares have the same security type (based on usstock_SecurityType2). Links between "Common Stock" and "Preferred Stock" (for example) are not provided. If you wish to determine links across security types, you can use the usstock_CIK field for this purpose. The CIK (Central Index Key) is a company-level identifier used in SEC filings and thus is the same for all securities associated with a single company.
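One way to find securities linked across security types is to group by usstock_CIK and look for companies with more than one security. The sketch below uses placeholder rows; the symbols, Sids, and CIK values are made up for illustration.

```python
import pandas as pd

# Placeholder rows: "XYZ" and "XYZ-PA" are a hypothetical common stock and
# preferred stock of the same company, so they share a CIK.
securities = pd.DataFrame(
    {
        "usstock_Symbol": ["XYZ", "XYZ-PA", "ABC"],
        "usstock_SecurityType2": ["Common Stock", "Preferred Stock", "Common Stock"],
        "usstock_CIK": [1111111, 1111111, 2222222],
    },
    index=pd.Index(["SID_XYZ", "SID_XYZ_PA", "SID_ABC"], name="Sid"),
)

# Collect the symbols belonging to each company-level identifier.
symbols_by_cik = securities.groupby("usstock_CIK")["usstock_Symbol"].apply(list)

# Keep only companies that have more than one security.
multi_security = symbols_by_cik[symbols_by_cik.map(len) > 1]
linked = {int(cik): symbols for cik, symbols in multi_security.items()}
print(linked)
```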

Master file

After you collect listings, you can download and inspect the master file, querying by symbol, exchange, currency, sid, or universe. When querying by exchange, you can use the MIC as in the following example (preferred), or the vendor-specific exchange code:

$ quantrocket master get --exchanges 'XNAS' 'XNYS' -o listings.csv

$ csvlook listings.csv

| Sid | Symbol | Exchange | Country | Currency | SecType | Etf | Timezone | Name |

| -------------- | ------ | -------- | ------- | -------- | ------- | --- | ------------------- | -------------------------- |

| FIBBG000B9XRY4 | AAPL | XNAS | US | USD | STK | 0 | America/New_York | APPLE INC |